Toca Boca + AppFollow: 75% review reply rate with automation


Toca Boca creates digital play spaces for kids. Their biggest product, Toca Boca World, has more than 60 million monthly active users. That kind of scale brings a specific problem: how do you handle 75,000 reviews every month without hiring an army?

In 2022 and 2023, their manual reply strategy wasn't scaling, and there was a risk that players would feel ignored. The share of negative reviews climbed from 21% to 25%, and something had to change.

Jonny Hair, Senior Customer Experience Manager at Toca Boca, and William Smith, LiveOps Marketing Manager, led a project to completely rethink how they handle app store feedback. Their results speak for themselves: a 109% increase in reply rate during 2025, 498% more reviews replied to year over year, and 26,700 stars added to ratings in the second half of 2025.

This is such a positive story that we couldn’t help sharing it in this format too. If you'd prefer our standard case study format, you can find it here. Enjoy!

The problems they faced

Before automation, Toca Boca's review management was not ready for the sheer success they were experiencing. Manual replies don't scale when you're getting thousands of reviews weekly, but the bigger issue was what happened when they did reply.

As with any app, technical problems happen. When support agents replied to complaints about bugs, they could only offer partial solutions, and players would update their reviews to drop the rating even lower. The reply effect was -0.3 stars: on average, replying was making ratings worse, not better.

The platforms themselves offered no help. Google Play and App Store consoles don't give you detailed data on what happens after you reply. You can't easily see which responses help and which hurt, you can't track patterns across thousands of reviews, and you definitely can't automate anything beyond the most basic keyword matching.

For Toca Boca, this raised a tough question: “If responding to reviews is actively damaging our rating, should we even reply?”

Why they couldn't just stop responding

App stores care about engagement. Platforms look at whether developers respond to reviews when making featuring decisions. For kids and tweens, the app store is often the first place they can leave a review as consumers, and they expect companies to listen.

Toca Boca's brand is built on creating safe, playful spaces where kids feel heard. Ignoring 75,000+ monthly reviews contradicted everything they stood for, and so they needed to find a way to respond at scale while helping players instead of making them angrier.

Four pillars of their approach

Fully commit to automation

The biggest shift was going all in on reply rate, building systems to handle the majority of feedback automatically. This was risky: what if automation made the negative reply effect even worse?

So they treated each new automation as an experiment: test it, monitor it closely, and adjust based on the results. Some automations were A/B tested with different copy and approaches to see what worked best.

Granular monitoring

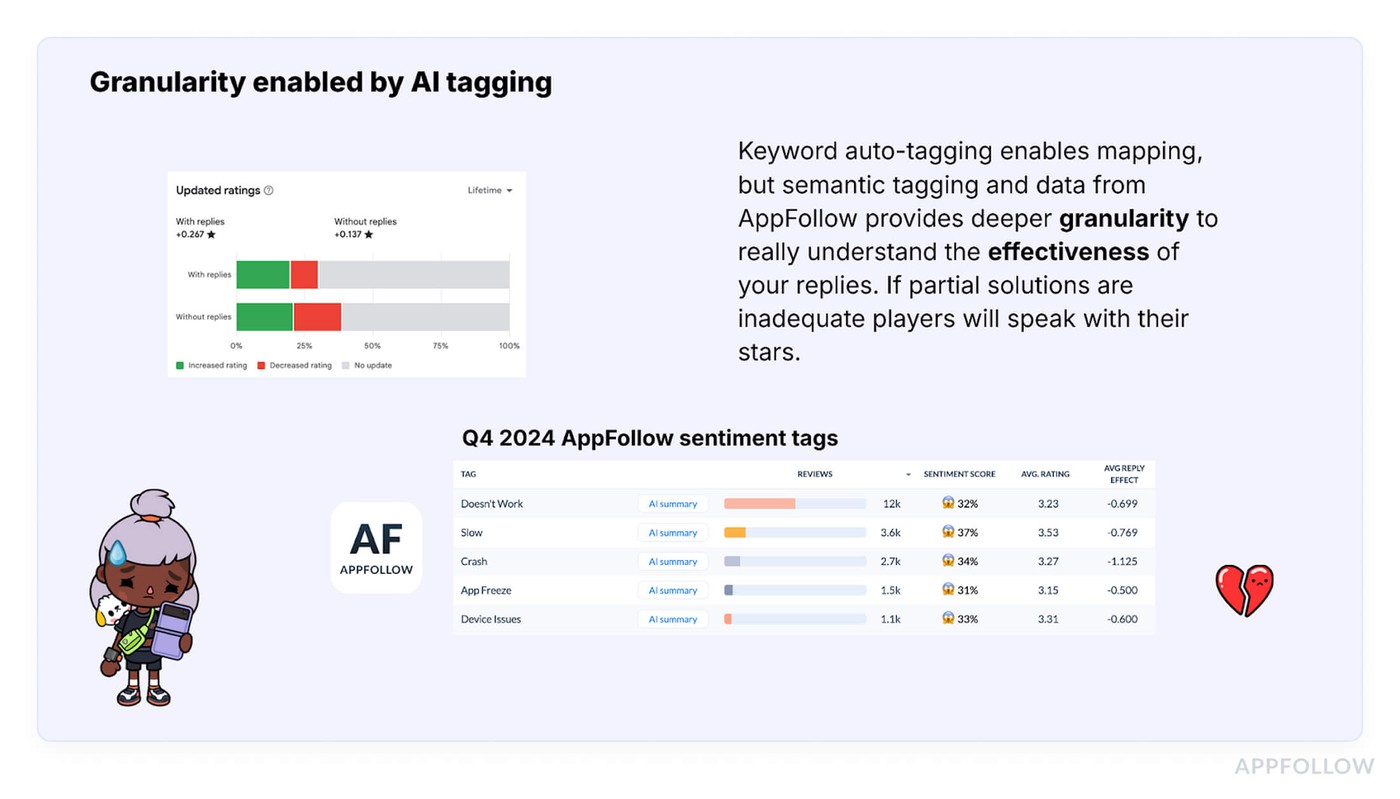

You can't improve what you don't measure. The team set up detailed tracking for every automation they launched, watching reply rate and reply effect together. If an automation started hurting ratings, they'd know immediately and could pull it back.
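The "pull it back" rule can be expressed as a simple check per automation. The sketch below is a minimal illustration and rests on several assumptions. It takes, for each automation, the list of star changes from players who updated their review after the automated reply. It treats the mean of those changes as that automation's reply effect. It flags any automation whose effect falls below a threshold once it has enough updated reviews to judge. The automation names, the 30-update minimum and the threshold of 0 are hypothetical, not AppFollow's API or Toca Boca's actual settings.

```python
from statistics import mean


def automations_to_pause(
    changes_by_automation: dict[str, list[int]],
    min_updates: int = 30,      # hypothetical minimum sample before judging
    threshold: float = 0.0,     # hypothetical: pause anything that lowers ratings on average
) -> list[tuple[str, float]]:
    """Return (automation, reply_effect) pairs whose mean post-reply rating change is below threshold."""
    flagged = []
    for name, changes in sorted(changes_by_automation.items()):
        if len(changes) < min_updates:
            continue  # too few updated reviews to judge this automation yet
        effect = float(mean(changes))  # reply effect: average star change after the reply
        if effect < threshold:
            flagged.append((name, effect))
    return flagged


# Hypothetical data: star changes observed after each automation's replies.
changes = {
    "crash_reply_v1": [-1] * 20 + [1] * 10,  # mostly made things worse
    "generic_1star": [2] * 25 + [0] * 10,    # mostly helped
    "new_rule": [-2] * 5,                    # too new to judge
}
print(automations_to_pause(changes))  # only crash_reply_v1 is flagged
```

The same check also works for A/B tests. If each copy variant is tracked under its own name, a variant that drags ratings down shows up separately from one that helps.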

High accuracy tagging

Generic keyword matching wasn't good enough. Toca Boca needed to identify issues with high confidence before automating responses. This meant building out tagging systems, using AppFollow's semantic tags and AI summaries to understand what players were complaining about.

Some automations went through approval mode first, where a human reviewed AI-generated responses before sending. Only after proving the automation worked reliably would they let it run on its own.

Quality assurance process

The team shifted agent work from manually typing responses to reviewing automation performance. Agents would check batches of automated replies, looking for cases where tagging missed the mark or responses didn't quite fit. This feedback loop kept improving both the tagging and the reply templates.

The tools they used

Auto-tags and semantic analysis

AppFollow's tagging system became the foundation. Keyword tracking helped map common issues. Semantic tagging used AI to understand sentiment and context beyond simple word matching. This gave them the granularity native app store tools couldn't provide.

When 500 reviews mention crashes, keyword tags catch that. When players write "this used to be my favorite game but now it's terrible," semantic analysis recognizes negative sentiment about a change instead of being misled by the positive word "favorite."

Templates

Toca Boca has strict tone of voice guidelines. They needed automation that didn't sound robotic but also didn't require human writing for every response. The reply kit feature let them create template folders for different scenarios.

Each automation could pull from multiple template variations, cycling through them to avoid repetitive responses. This maintained their brand voice while scaling responses from hundreds to tens of thousands monthly.
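Cycling through template variations can be done with a simple round-robin per folder. This is an illustrative sketch, not how AppFollow's reply kit works internally. The folder name, the template text and the `{article_url}` placeholder are all hypothetical. It keeps one independent rotation per scenario, so each automation varies its wording regardless of what the others are sending.

```python
from itertools import cycle


class TemplateRotator:
    """Round-robin over template variations, one independent rotation per folder (illustrative only)."""

    def __init__(self, folders: dict[str, list[str]]):
        # Each folder gets its own endless iterator over its variations.
        self._cycles = {name: cycle(variants) for name, variants in folders.items()}

    def next_reply(self, folder: str, **fields: str) -> str:
        # Take the next variation for this folder and fill in its placeholders.
        template = next(self._cycles[folder])
        return template.format(**fields)


# Hypothetical folder with two on-brand variations of the same message.
folders = {
    "crash_report": [
        "Sorry the game is crashing! This article should help: {article_url}",
        "Thanks for telling us about the crashes. Here's what to try: {article_url}",
    ],
}
rotator = TemplateRotator(folders)
url = "https://example.com/help/crashes"  # placeholder URL
print(rotator.next_reply("crash_report", article_url=url))
print(rotator.next_reply("crash_report", article_url=url))
print(rotator.next_reply("crash_report", article_url=url))  # wraps back to the first variation
```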

AI Summaries

When negative sentiment spiked, AI summaries helped the team understand why quickly. Instead of reading through 200 individual reviews to spot a pattern, they'd get a summary showing "43 reviews mention access problems, 28 mention crashes on Samsung devices, 15 want refunds."

This speed mattered for catching issues before they spiraled. If a new update broke something, they'd know within hours instead of days.

Integration with support resources

Reviews often escalated to support tickets. Rather than keeping these channels separate, they integrated AppFollow with their support system. Reviews about complex issues could be routed appropriately. Generic automations could link directly to relevant help articles.

Results by quarter

- Q1-Q2: Target was 40% reply rate with neutral reply effect. They hit 43.08% reply rate with +0.01 reply effect. The team focused on targeting major pain points through improved tagging. They built out their core automation systems and established the monitoring processes they'd rely on later. Progress was steady but modest, exactly what they planned for this phase.

- Q3: Target was 60% reply rate with +0.1 reply effect. They achieved 66.05% reply rate with +0.06 reply effect. The original roadmap changed here. Instead of receiving the planned feature improvements, the team pivoted to the free player experience, an area that had been tricky to handle before. By iterating on copy and adding better qualifiers to their automations, they found approaches that worked.

- Q4: Target was 70% reply rate with +0.2 reply effect. They reached 71.76% reply rate with +0.15 reply effect. The team identified blind spots where the nature of their young audience made issues hard to categorize. Their solution was more generic automations that directed players to support resources regardless of the problem.

Key lessons learned

Inconclusive data isn't useless

Early on, they only automated reviews where they could identify the exact issue with high confidence. That limited how many reviews they could handle, until they realized that inconclusive data still had value.

For reviews they couldn't categorize precisely, they created general responses directing players to support resources. This let Toca Boca cast a wider net: a universal 1-star automation replied to 13,800 reviews in Q4 with a +1.71 reply effect. Players just needed acknowledgment and a path to help, even if the response wasn't perfectly tailored.
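The routing idea is to use a specific reply when the issue is identified with high confidence and fall back to a generic support reply otherwise. It can be sketched as a small decision function. This is a minimal sketch under stated assumptions. It assumes the tagging step yields a numeric confidence per tag, which AppFollow's semantic tags may not expose in this form. The tag names, folder names and the 0.9 threshold are hypothetical. Anything that is neither confidently tagged nor 1-star is left for other rules or a human.

```python
from typing import NamedTuple, Optional


class Tag(NamedTuple):
    name: str
    confidence: float  # assumed 0..1 score from the tagging step


# Hypothetical mapping from high-confidence tags to specific reply folders.
SPECIFIC_AUTOMATIONS = {"crash": "crash_reply", "login": "login_reply"}
MIN_CONFIDENCE = 0.9  # illustrative threshold


def choose_automation(stars: int, tags: list[Tag]) -> Optional[str]:
    """Pick a specific automation if a known tag is confident enough, else a generic 1-star fallback."""
    confident = [
        t for t in tags
        if t.name in SPECIFIC_AUTOMATIONS and t.confidence >= MIN_CONFIDENCE
    ]
    if confident:
        best = max(confident, key=lambda t: t.confidence)
        return SPECIFIC_AUTOMATIONS[best.name]
    if stars == 1:
        return "generic_1star_support"  # acknowledge and point to support resources
    return None  # no automation applies; leave for other rules or a human


print(choose_automation(1, [Tag("crash", 0.95)]))  # specific crash reply
print(choose_automation(1, [Tag("crash", 0.40)]))  # unclear issue: generic fallback
print(choose_automation(3, []))                    # not handled by these rules
```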

Templates are never finished

The team kept iterating on reply templates throughout the year. What worked in Q1 might not work in Q3: player sentiment changes, new issues emerge, and old problems get fixed. Continuous refinement based on reply effect data kept their automations effective.

They also used AI rephrasing within templates to test copy and prevent repetition while staying on brand, which adds variety without needing dozens of hand-written versions of each response.

Quality assurance beats manual replies

It's more productive to have agents review automated responses than to have them type replies manually. By checking automation performance, agents could cover far more ground and catch edge cases where tagging failed or responses missed the mark.

This quality assurance process maintained the accuracy they needed while letting automation handle the volume they couldn't manage manually.

Put yourself in the player's shoes

When directing players to support resources, generic landing pages overwhelmed them: too many articles and no clear place to start. Linking to specific, relevant articles instead made players feel that the help was designed for them.

Small details like this improved how players responded to automated replies.

The right vendor matters

Jonny Hair noted that AppFollow went beyond being a typical software vendor. When they needed data or features to support their strategy, AppFollow worked with them proactively instead of saying "we'll add that to the feature request list for six months from now."

This partnership approach helped them move faster and get more granular insights than the platform offered out of the box.

What they achieved

By the end of 2025, the results were clear:

- 109% increase in reply rate from where they started

- +0.45 improvement in reply effect

- 498% increase in total reviews replied to year over year

- 26,700 stars added to ratings after review replies in the second half of the year

- App Store rating improved from 3.9 to 4.1 stars

- Google Play rating moved from 4.2 to 4.3 stars

More importantly, Toca Boca proved you could scale review responses to tens of thousands monthly while improving ratings instead of damaging them. The key was treating automation as a system that needed constant monitoring and iteration, not something you set and forget.

What's next for Toca Boca

The team is exploring the feasibility of AI-generated replies as their next step. With strong foundations in tagging, templates, and quality assurance now in place, AI replies could handle even more edge cases while maintaining quality.

They're also looking at building out their knowledge base to work better with automated responses. When you're directing players to help resources at scale, those resources need to be comprehensive and easy to navigate.

The approach they've developed, letting automation handle volume while humans focus on quality assurance and iteration, gives them room to keep growing without proportionally growing their team.

How you can apply this

Your app probably isn't getting 75,000 reviews monthly. But the principles Toca Boca used work at any scale:

- Start with comprehensive tagging. You need to understand what players are saying before you can automate responses effectively.

- Treat automations as experiments: launch them carefully, monitor performance, and iterate based on data. Don't automate everything at once.

- Track reply effect religiously. If your responses aren't helping players or improving ratings, you need to know immediately so you can adjust.

- Build quality assurance into your process and have humans review automation performance regularly to catch issues.

- Don't let inconclusive data block you. Sometimes, a generic helpful response is better than no response. Give players a path forward even when you can't perfectly categorize their issue.

- Reply templates, tagging rules, and automation strategies all need continuous refinement as your app and player base evolve.

The tools exist to handle massive review volumes without sacrificing quality. You just need to approach automation strategically instead of hoping technology alone will solve the problem. While you’re at it, we recommend learning more about automating your app reputation management and how automated review management improves customer engagement.

Good luck, and thank you, Toca Boca, for this wonderful case study and experience working together!

FAQs

What is the reply effect and why does it matter for mobile game reviews?

Reply effect measures whether your review responses change user ratings. When players update their rating after you respond, that change (positive or negative) is your reply effect. For gaming apps, this metric matters because it shows if your support efforts are working or backfiring. A positive reply effect means your responses convince unhappy players to raise their ratings, directly improving your app store presence.

How can gaming companies automate app store reviews without sounding robotic?

Gaming companies can maintain authentic communication at scale by using template systems with multiple variations, AI rephrasing tools that preserve brand voice while preventing repetition, and quality assurance processes where humans review automation performance.

What metrics should mobile game developers track for review management?

Game developers should track reply rate (percentage of reviews receiving responses), reply effect (average rating change after developer response), response speed (time from review to reply), and sentiment trends over time.
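Three of these metrics can be computed from a plain list of reviews. This is a minimal sketch under several assumptions:

- The `Review` fields are hypothetical, not AppFollow's data model or the store APIs.
- Each review records when it was posted, its star rating before any post-reply edit, when (if ever) it was replied to, and the rating after the player edited it.
- Reply effect is taken as the mean change among replied reviews that were updated afterward.
- Response speed is summarized as the median time to reply.

Sentiment trends need a sentiment score per review and are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass
class Review:
    posted_at: datetime
    rating: int                           # stars before any post-reply edit
    replied_at: Optional[datetime] = None
    updated_rating: Optional[int] = None  # stars after the player edited the review post-reply


def review_metrics(reviews: list[Review]) -> dict:
    replied = [r for r in reviews if r.replied_at is not None]
    # Rating changes only count for replied reviews the player actually updated.
    changes = [r.updated_rating - r.rating for r in replied if r.updated_rating is not None]
    delays = [r.replied_at - r.posted_at for r in replied]
    return {
        "reply_rate": len(replied) / len(reviews) if reviews else 0.0,
        "reply_effect": mean(changes) if changes else None,
        "median_response_time": median(delays) if delays else None,
    }


t0 = datetime(2025, 1, 1)
reviews = [
    Review(t0, 1, t0 + timedelta(hours=2), 3),  # replied, player raised rating by 2
    Review(t0, 2, t0 + timedelta(hours=4), 2),  # replied, rating unchanged
    Review(t0, 5),                              # not replied
    Review(t0, 1, t0 + timedelta(hours=6)),     # replied, never updated
]
print(review_metrics(reviews))
```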