AppFollow 2025 product updates and what's coming next

On December 3rd, we held a webinar titled "AppFollow 2025 in Review." The idea was to look back at what we shipped this year and talk about why we built it. The short answer is that most of it came from our dear clients! Customer feedback, feature requests, support tickets, Slack conversations. We tracked all of it and tried to turn it into product improvements.

Apart from our team, we had a special guest from Opera who shared how her team uses AppFollow to catch bugs and track feature requests.

Here's what we covered and what's coming next.

We also recommend watching the webinar recap to learn more.

The numbers behind 2025

Before getting into features, some context on where we're at.

We track tens of thousands of apps now, both customers' own apps and the competitors they monitor. Reviews are still the primary thing we analyze, and the volume keeps growing.

The automation stat that stood out is that 50% of replies are now automated. That's up 10% from last year. People are trusting AI to handle more of their review responses. We think that number will keep climbing over the next couple of years, but there are always going to be cases where human responses matter.

We also doubled the number of customer calls this year. Product team, success team, support team. Everyone's been jumping on calls to hear feedback directly. That's how we figure out what to prioritize.

The hours-saved calculation is a bit fuzzy, but we estimate that AI features have saved customers a significant amount of time. Summarization, categorization, and automated replies all add up.

We’ve also processed 10B tokens through OpenAI for our AI-enabled features, and got a nice plaque for it!

AI as a support agent

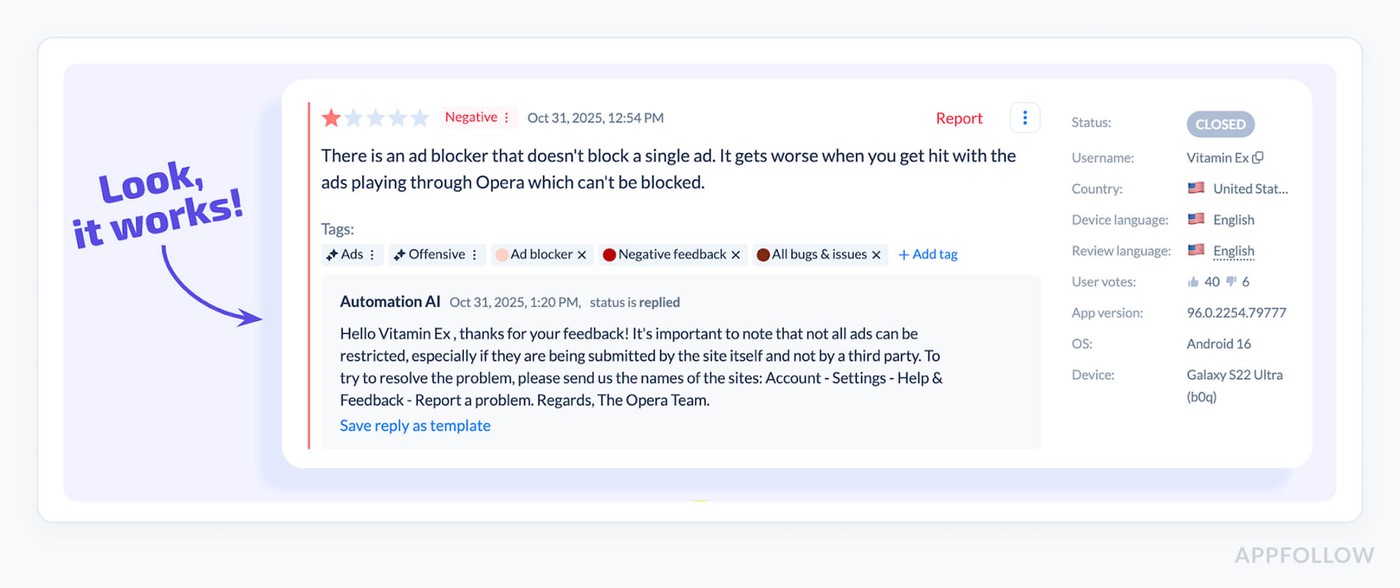

The biggest update on the support side is how we've been treating AI automation. We stopped thinking of it as just a feature and started treating it like a support agent. It greets customers, provides helpful information, follows your tone guidelines, and solves problems.

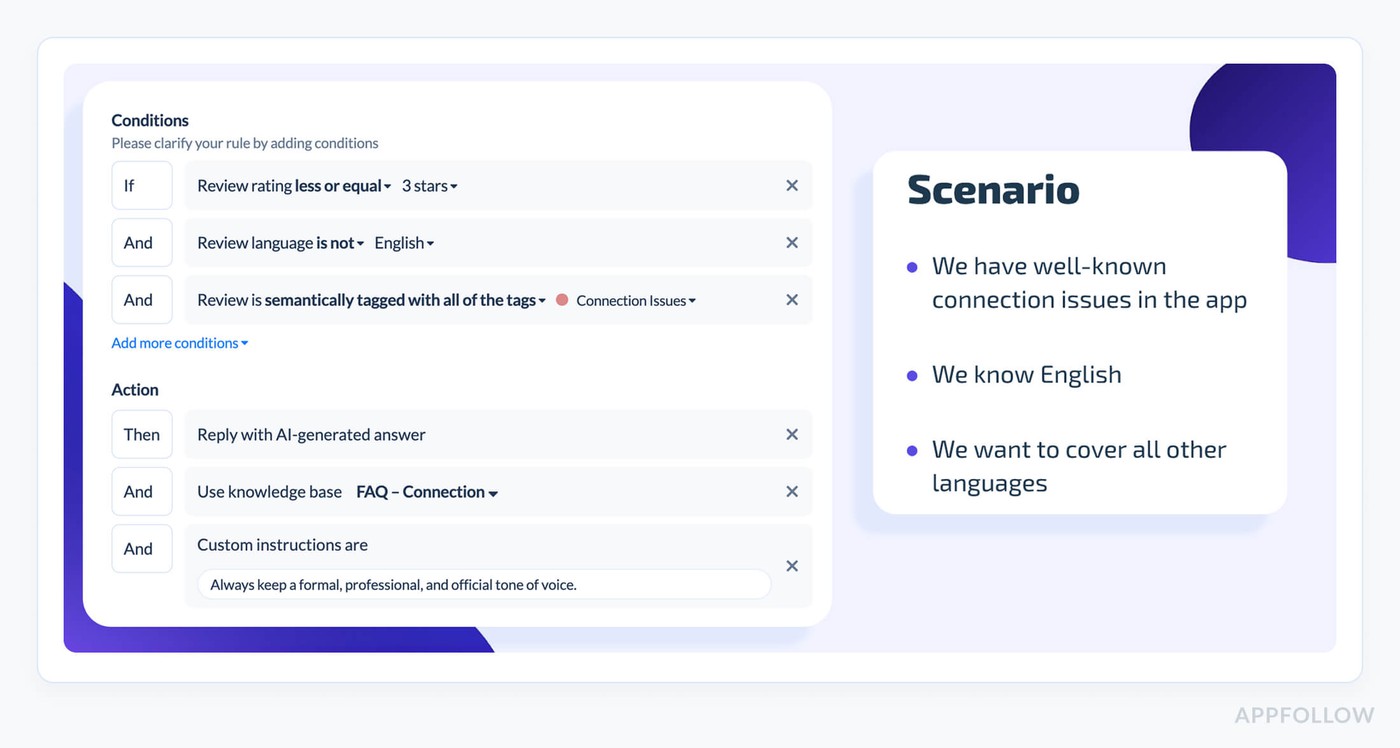

Say you have a known connection issue in your app. You've got help center articles about it. You usually handle English reviews yourself, but your app is global. You need to support other languages too.

You can set up automation to handle non-English reviews about connection issues. You tell it to use a formal tone, point it to your knowledge base, and let it figure out how to help. If someone in Germany writes about connection problems, the AI reads your FAQ, finds relevant troubleshooting steps, and writes a response that addresses the issue.

This works because we let you train it on your own documentation. The responses aren't generic; they pull from what you've already written.
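To make the scenario concrete, here is a minimal sketch of how such a rule could be modeled. It is not AppFollow's configuration format: the `Review` and `AutoReplyRule` classes, their field names, and the tag value are all hypothetical, and the sketch assumes each review already carries a language code and semantic tags.

```python
from dataclasses import dataclass


@dataclass
class Review:
    # Hypothetical shape of a review; field names are illustrative only.
    language: str    # language code, e.g. "de"
    rating: int      # 1 to 5 stars
    tags: set[str]   # semantic tags assumed to be assigned upstream


@dataclass
class AutoReplyRule:
    # Hypothetical rule, not AppFollow's actual rule schema.
    exclude_languages: set[str]  # languages the human team handles itself
    topic: str                   # semantic tag the rule reacts to
    tone: str                    # tone guideline passed to the reply step
    knowledge_base: str          # label for the docs the reply should draw on

    def matches(self, review: Review) -> bool:
        """Return True if the review should be handled by automation."""
        if review.language in self.exclude_languages:
            return False
        return self.topic in review.tags


rule = AutoReplyRule(
    exclude_languages={"en"},
    topic="connection issue",
    tone="formal",
    knowledge_base="help-center",
)

german = Review(language="de", rating=1, tags={"connection issue"})
english = Review(language="en", rating=2, tags={"connection issue"})

print(rule.matches(german))   # True: non-English review about the known issue
print(rule.matches(english))  # False: left for the human team
```

The rule only decides which reviews go to automation. Writing the reply from the knowledge base is a separate step that this sketch leaves out.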

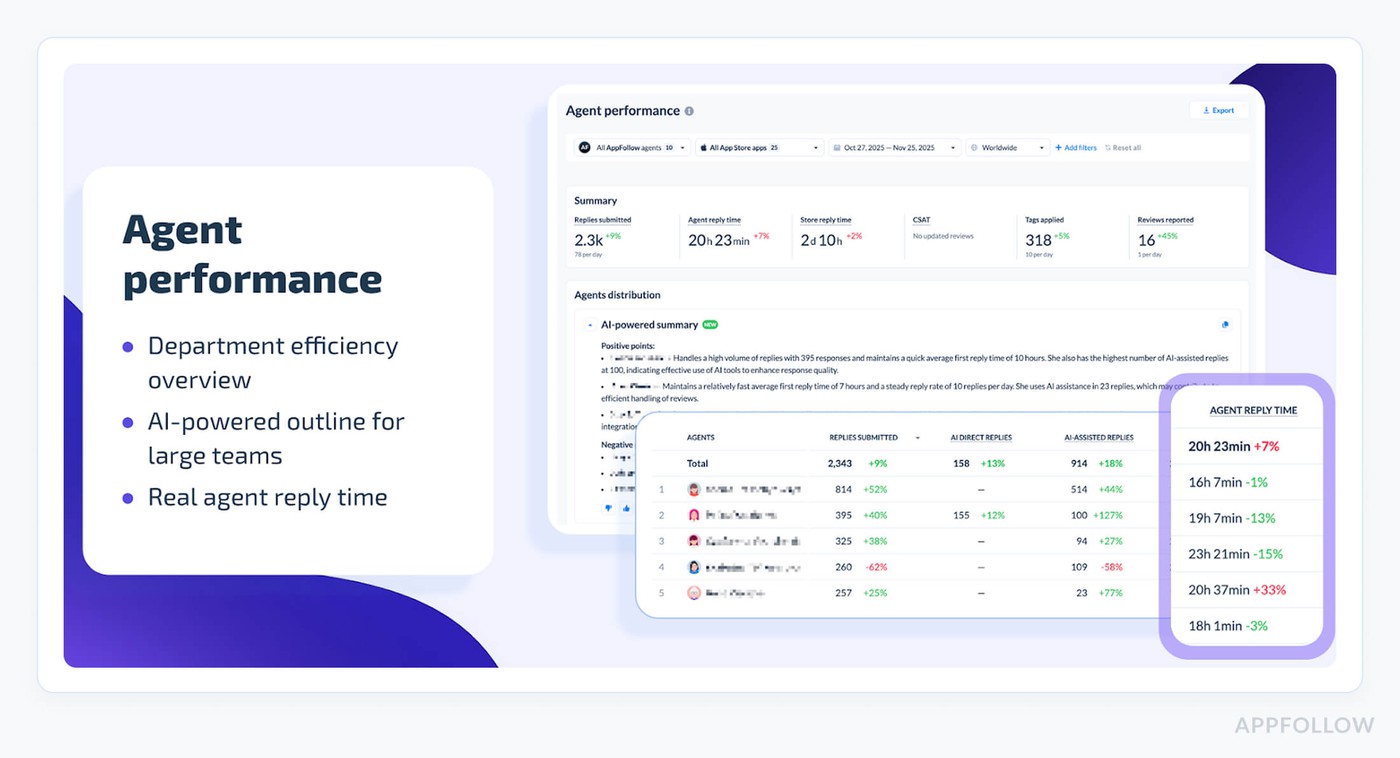

Agent performance tracking

Once you have AI handling reviews alongside human agents, you need to compare them.

We built an agent performance dashboard that treats AI automation as just another team member. You can see reply rates, response times, and efficiency metrics for everyone, including the bot.

There's an AI summary on this page, too. If you have dozens of agents, you don't want to check each one manually. The summary highlights what you need to know about department efficiency.

One thing we added recently is real agent reply time. Here's the problem. Review moderation in app stores takes time. A customer might not see your response for a day or two, even if your agent replied in 15 minutes. We now show the time the agent took to respond, not the time until it appeared in the store.

For AI automation, this is basically instant: a few seconds. That's why we keep pushing automation. On Google Play, especially, customers can get a response within minutes of leaving a review. If someone posts a frustrated one-star review and gets a helpful response right away, that changes things.
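The difference between the two measurements is simple timestamp arithmetic. The sketch below assumes three timestamps are available per review. The function and parameter names are hypothetical and do not reflect how the dashboard is implemented.

```python
from datetime import datetime, timedelta


def reply_times(posted_at, agent_replied_at, visible_in_store_at):
    """Return (agent reply time, store reply time) for one review.

    All three parameters are hypothetical timestamps:
    when the review was posted, when the agent submitted a reply,
    and when the reply became visible after store moderation.
    """
    agent_reply_time = agent_replied_at - posted_at
    store_reply_time = visible_in_store_at - posted_at
    return agent_reply_time, store_reply_time


posted = datetime(2025, 12, 1, 9, 0)
replied = posted + timedelta(minutes=15)          # agent answers in 15 minutes
visible = posted + timedelta(days=1, hours=12)    # moderation adds a day and a half

agent, store = reply_times(posted, replied, visible)
print(agent)  # 0:15:00
print(store)  # 1 day, 12:00:00
```

Judging the agent on the second number would hide the fact that the reply itself took 15 minutes.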

Reporting got better

We spent a lot of time on reporting this year. The home hub now shows five key metrics for review management: average rating, reply rate, store reply time, after reply rate, and tag rate. You can see where you stand compared to benchmarks and figure out what needs work.

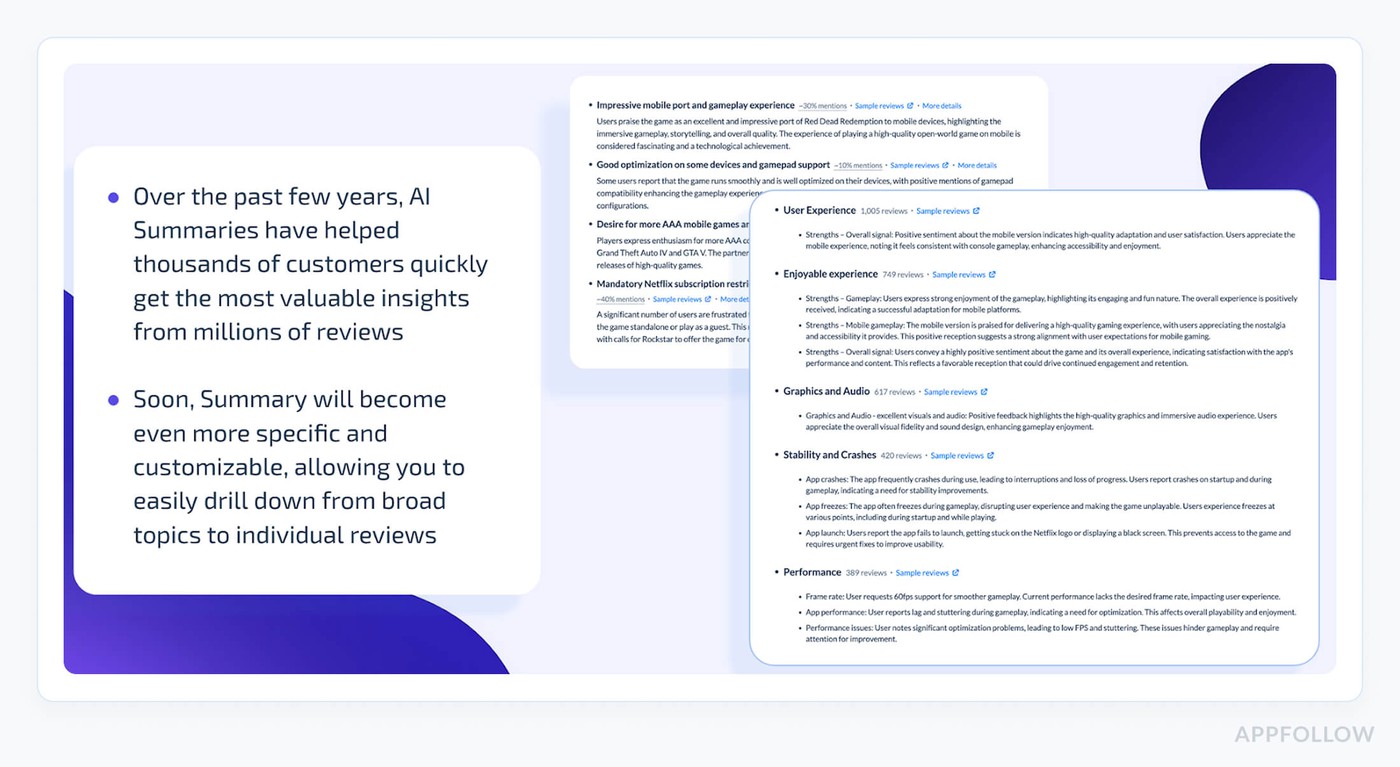

AI Summary was something we launched last year, and we've been improving it. The big addition is period comparison. You can see how things changed from one month to the next instead of just looking at a snapshot.

We're also working on a new version that goes deeper. The current summary gives a high-level overview, but customers told us it's not detailed enough sometimes. The next version will have more specific topics with examples. That's in closed beta now and should roll out early next year.

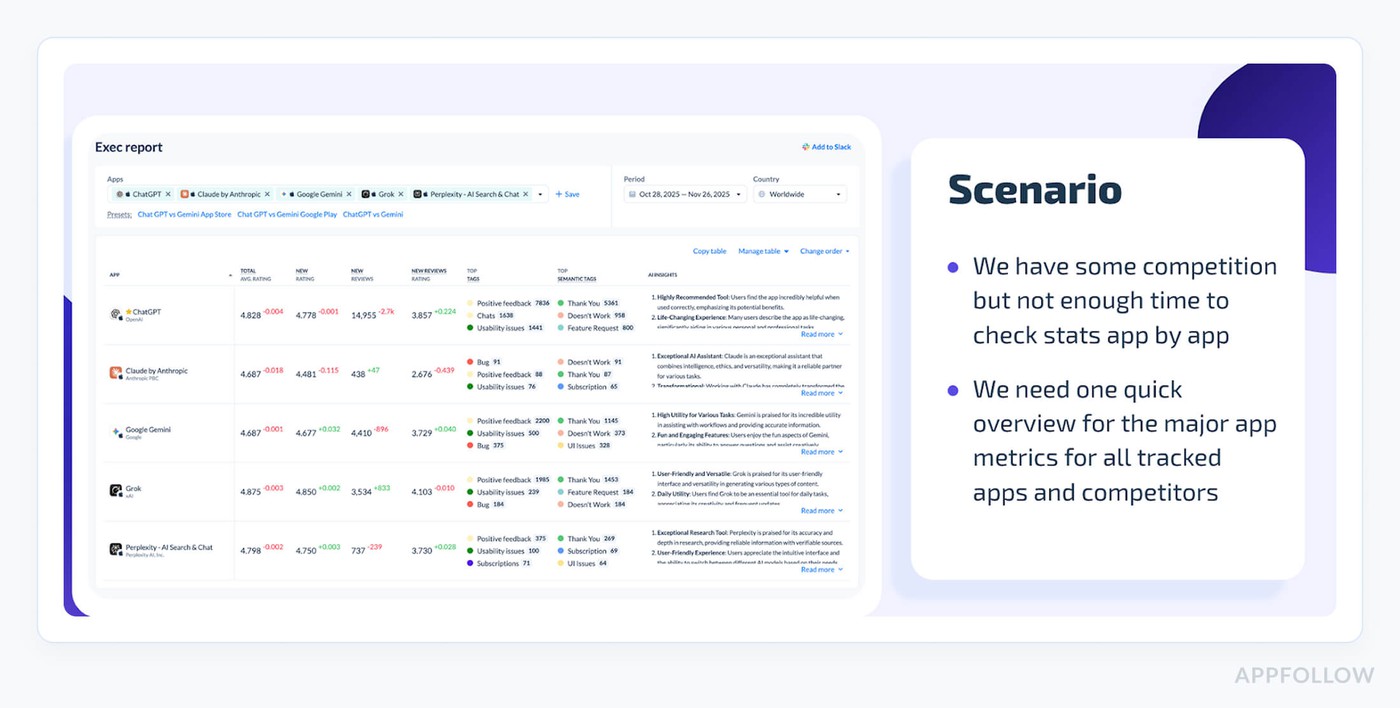

The exec report is another thing people have been using a lot. It shows trends across all the apps you track, both yours and your competitors'. It's useful for monthly or quarterly check-ins when you don't have time to dig into daily data. Based on feedback, we're planning to add breakdowns by tags and countries.

Slack integration for exec reports

The exec report also works in Slack now. We send weekly summaries with separate sections for positive reviews, negative reviews, new issues, and example reviews. Our guest from Opera mentioned that this is now part of their routine. Every week, they get a summary dropped into Slack and can immediately spot if something's wrong.

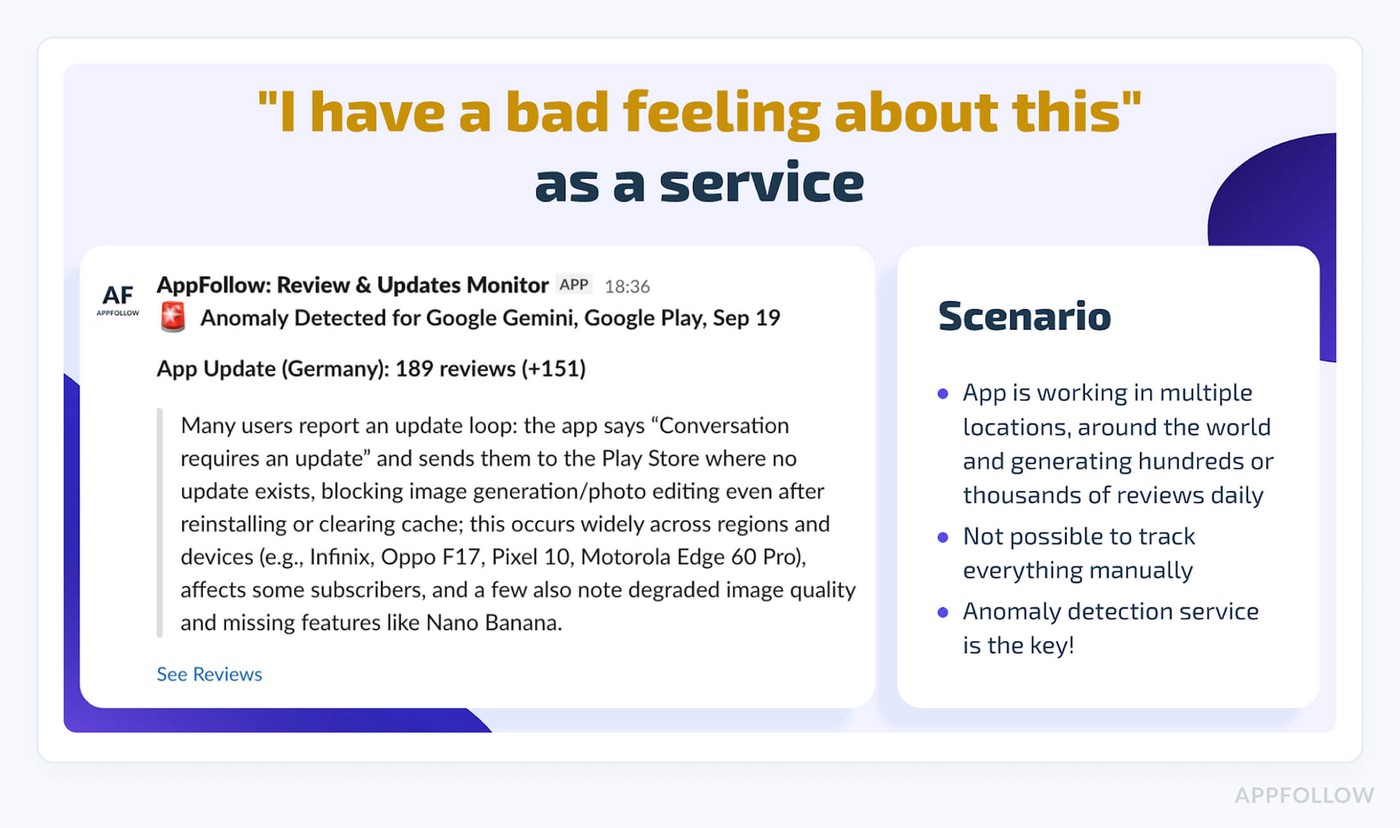

We're also working on anomaly detection. The idea is to automatically flag spikes in negative feedback and summarize what's happening. If you suddenly get a bunch of complaints about an app update in a specific country, you'll get notified with context about what users are saying. This is in closed beta, too.
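As a rough illustration of what "flagging a spike" can mean, here is a minimal sketch that compares each day's negative-review count with a trailing baseline. This is a generic technique, not a description of AppFollow's detection logic. The function name, the 7-day window, and the threshold are all assumptions.

```python
from statistics import mean, pstdev


def flag_spikes(daily_negative_counts, window=7, threshold=3.0):
    """Return indexes of days whose count is far above the trailing baseline.

    A day is flagged when its count exceeds
    mean(previous `window` days) + threshold * standard deviation.
    """
    flagged = []
    for i in range(window, len(daily_negative_counts)):
        baseline = daily_negative_counts[i - window:i]
        mu = mean(baseline)
        # Floor the deviation at 1 so a very flat baseline does not
        # turn a change of one or two reviews into an alert.
        sigma = max(pstdev(baseline), 1.0)
        if daily_negative_counts[i] > mu + threshold * sigma:
            flagged.append(i)
    return flagged


# Hypothetical counts of negative reviews per day for one app and country.
counts = [4, 5, 3, 6, 4, 5, 4, 5, 31, 6]
print(flag_spikes(counts))  # [8]
```

In practice, a flagged day would then be paired with a summary of what users are saying, which is the part that makes the notification useful.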

New data sources

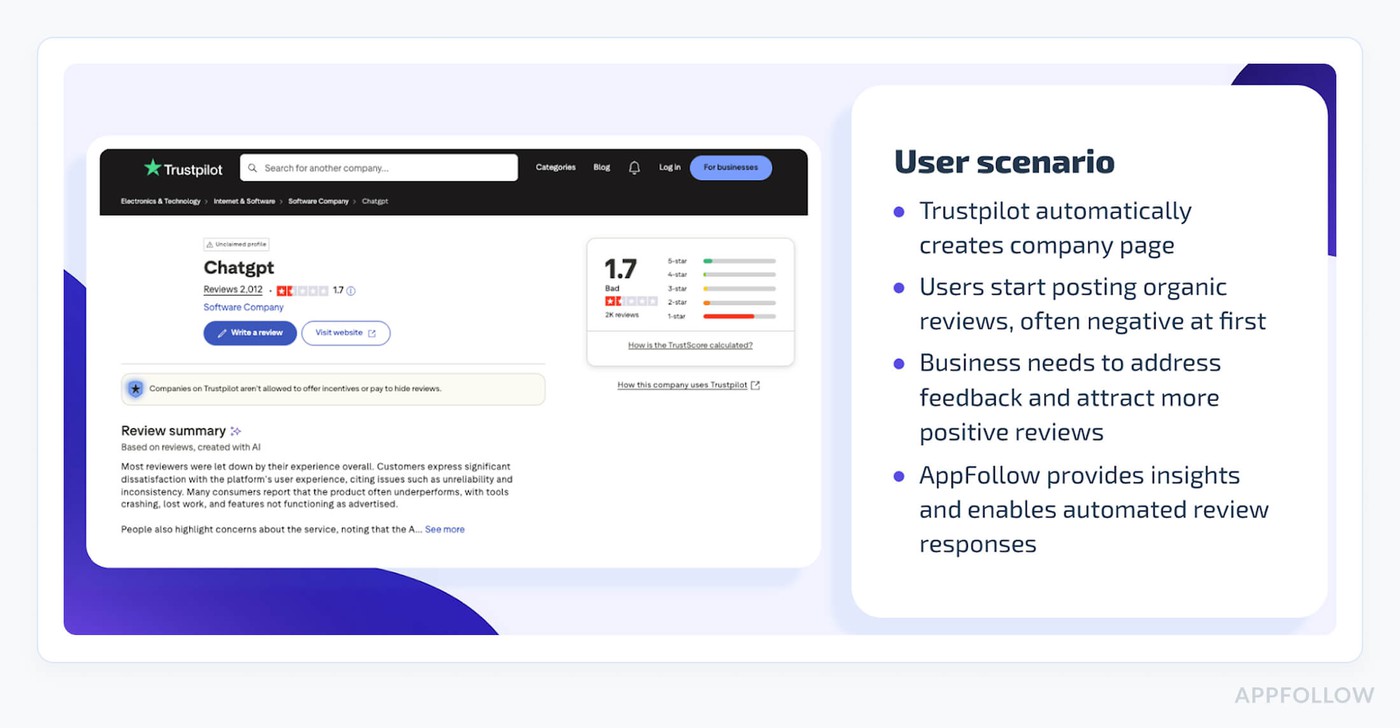

Trustpilot is the big one. It's our first non-app store data source. You can collect reviews and ratings, respond to reviews, set up automation, use semantic tags, and run AI summaries. All the same stuff you do for app stores.

Here's something worth knowing. Trustpilot pages can exist for your company without you creating them. Users or the platform can set them up automatically. So you might have a Trustpilot presence you don't even know about. And if you're not managing it, the organic reviews tend to be negative because people generally write bad things online when they're not prompted.

We improved support for secondary stores like Samsung and Huawei. Their feature coverage wasn't as good as it is for the App Store and Google Play, so we've been evening that out. Alerts work for Samsung now, Zendesk integration is available, and we completely rebuilt the Huawei review feed.

For next year, we're focused on Steam, Facebook, and Discord. We have prototypes, and we're gathering feedback. Gaming companies, especially, have been asking for Steam. We also built an API method that lets you upload review data from any source into AppFollow, and it just launched.

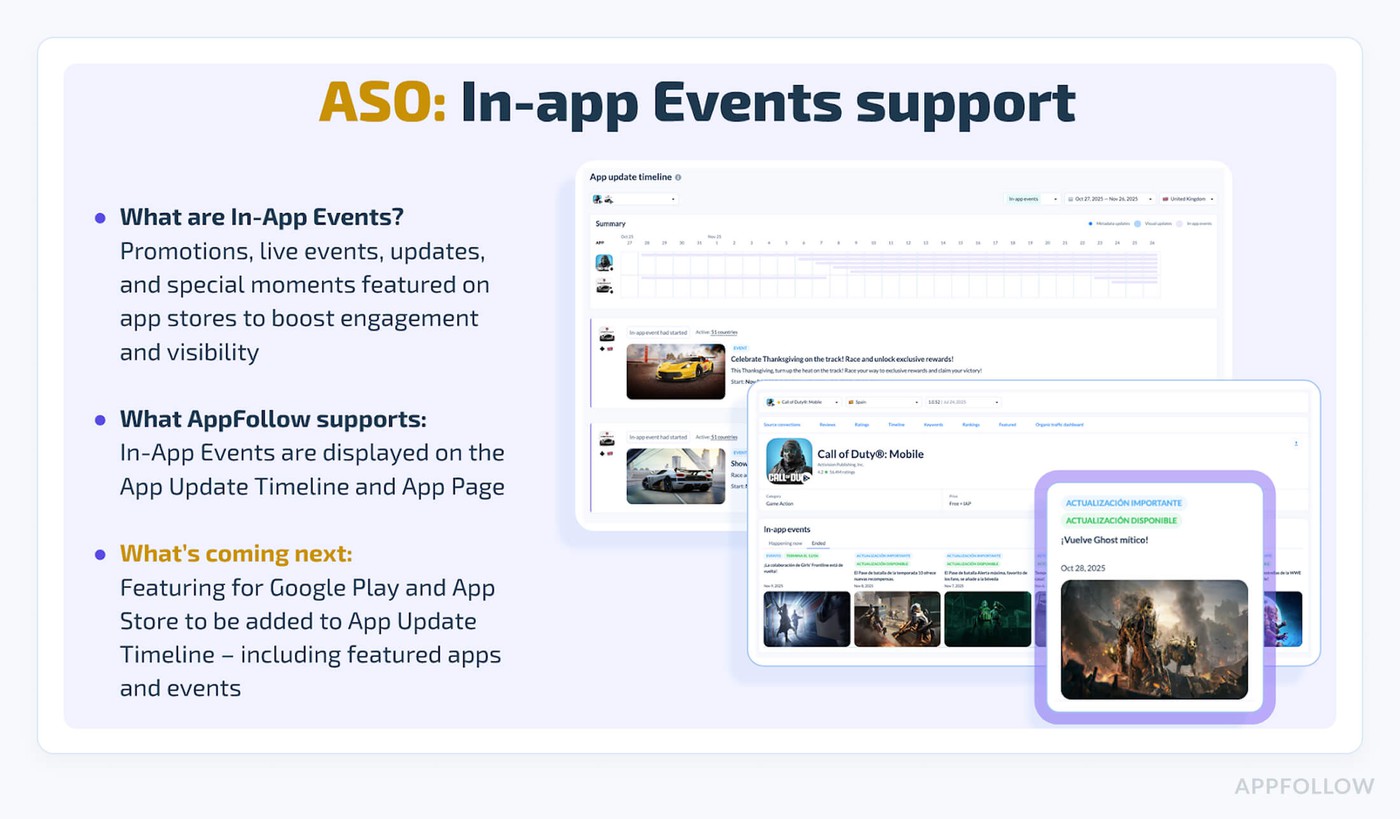

ASO updates

We started tracking in-app events this year. These show up on the app update timeline alongside other changes. You can see when an event went live, when it got featured, and when it ended. This works for your apps and competitors.

The next step is tracking when events and apps get featured. You'll be able to see which types of in-app events tend to get featured and use that to inform your strategy.

We're investing more in ASO overall. Custom product pages support is coming. More reporting features, too. If you have ASO feature requests, now's a good time to vote on them in our feedback system. There aren't as many votes in that area yet, so your input carries more weight.

Pricing changes

We made a conscious decision this year to go against the trend of inflating prices and upselling. Instead, we moved features down to lower-tier plans.

Automation is now available on all plans. Same with semantic and sentiment tags. AI features are part of every plan, too. We wanted people to be able to use the powerful stuff before they reach enterprise scale.

For features we couldn't include in lower tiers, we introduced add-ons. If you need something from a higher plan but you're not ready to upgrade, you can unlock individual features at lower prices.

We also launched a dedicated ASO plan for indie developers and small companies who mainly need the visibility and keyword tracking tools.

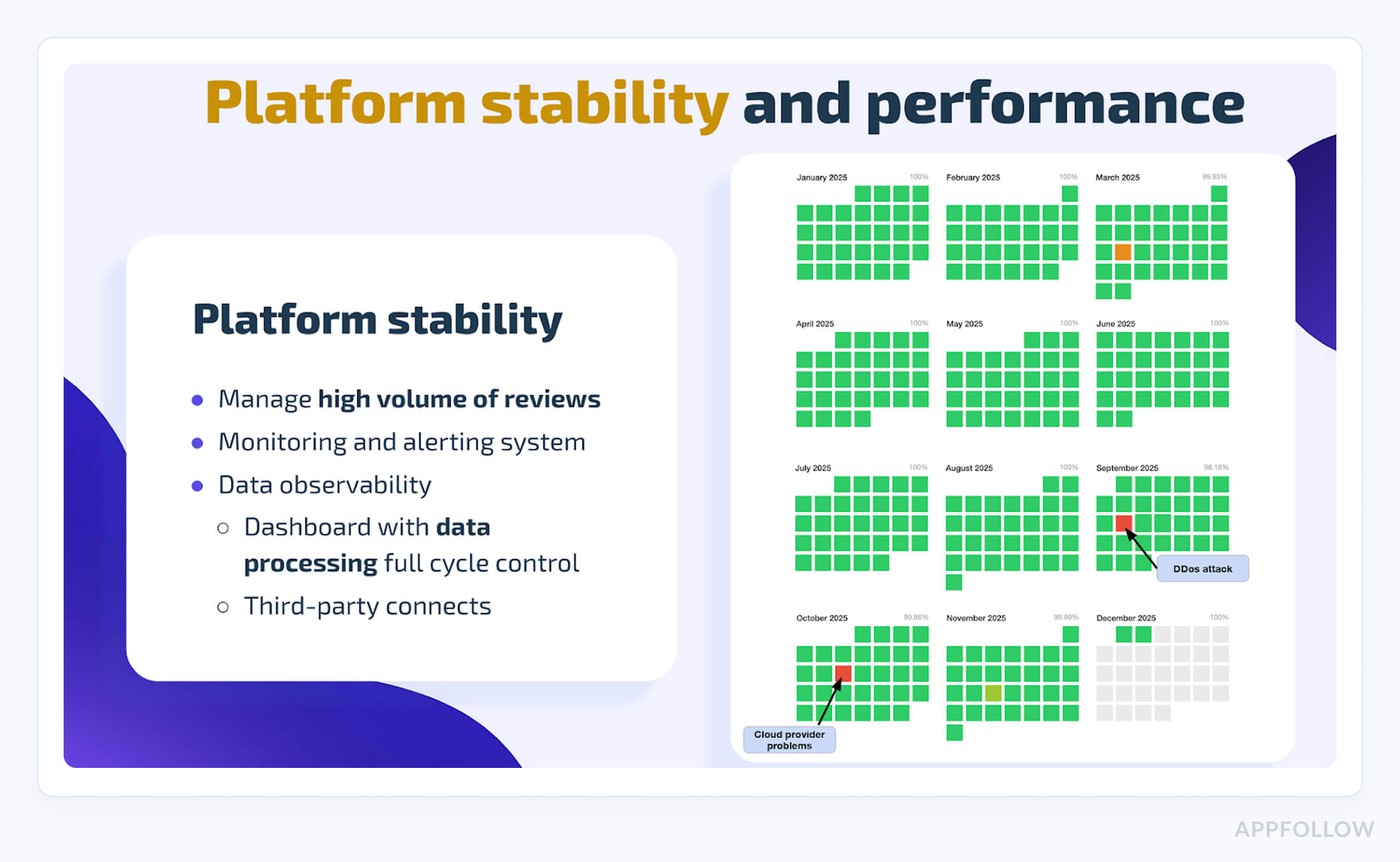

Stability and security

We have ISO 27001 certification for the second year in a row. This covers how we handle data, onboard employees, run penetration tests, and manage information security overall. Enterprise customers care about this stuff.

We have a status page where we post about incidents and share postmortems. When customers report issues, we add them there. The engineering, support, and product teams all stay in the loop on incidents and figure out how to prevent them.

We rebuilt the automation system this year because the old one was hitting its limits. The new version handles higher load and scales better. That's important because auto-reply timing matters. If it takes too long to respond, you lose the benefit of quick engagement.

On AI specifically, we added documentation explaining how we use it and confirming we don't train models on customer data. Security teams at larger companies ask about this.

Voice of the customer with Opera

Miyuki Barsk, Mobile Customer Support Lead at Opera, joined us as our special guest. Her team manages app reviews and bug reports, tests user-reported bugs before escalating to developers, and sorts through feature requests to find the important ones.

She said the weekly exec report in Slack has become part of their routine. The new issues section is especially useful. She shared an example where users were reporting a startup problem that the team thought had been fixed two releases ago. That kind of thing gets lost in the noise of thousands of reviews. The exec report surfaced it.

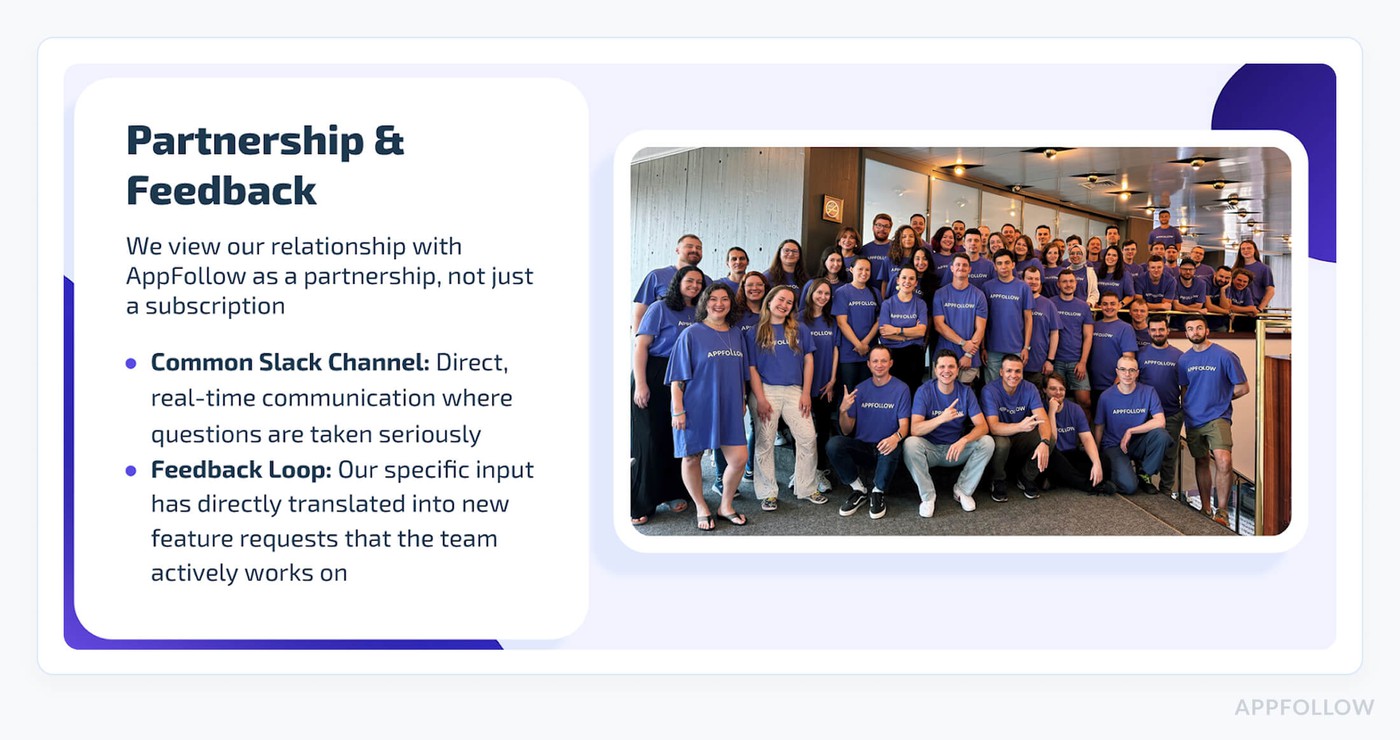

She also mentioned the feedback loop with our team. When she asks questions in Slack, we take them seriously. Some of her input turned into feature requests we're actively working on.

Her team uses AppFollow in two ways. First, as an extra QA layer to catch regressions. They find bugs in the wild that automated tests miss. Second, to analyze feature requests and understand what users want versus what the team assumes they want.

Case studies

A few customer examples worth mentioning.

- Gameloft saw a 62% increase in review ratings after responding to reviews through AppFollow. They decreased response time from 30 days to 3 days. Their reply rate doubled.

- Huuuge Games automated 80% of their review tagging and 50% of their responses. Their app rating stays stable at 4+ stars.

- Mytona hit a 100% reply rate within a year. All reviews are auto-tagged. They respond to about 3,000 reviews monthly across multiple stores and languages.

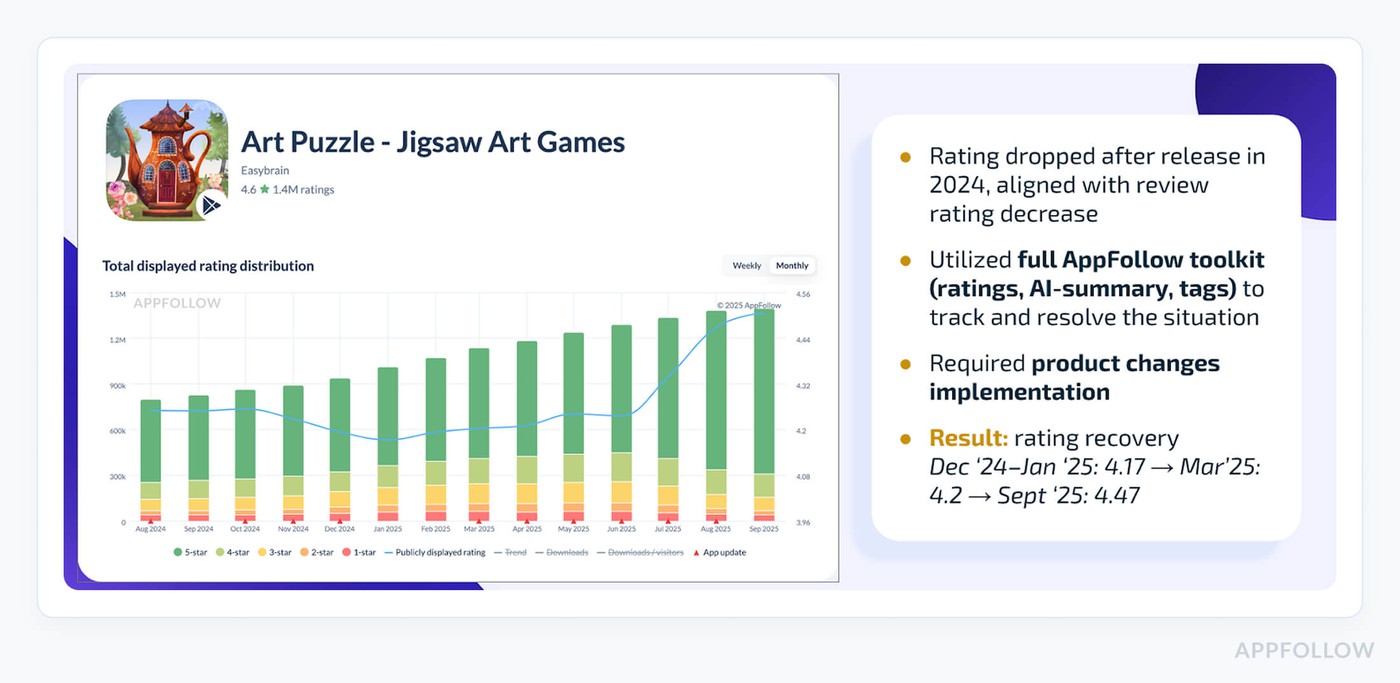

There was also a case study from Easybrain about rating recovery. An app's rating dropped after its release in 2024. They used the full toolkit, including ratings tracking, AI summary, and tags, to identify and fix the issues. The rating went from 4.17 in December to 4.47 by September.

What's coming in 2026

We'll share a public roadmap around February. Roughly, the focus areas are:

- Better reporting for leadership teams, including benchmark comparisons

- More Slack and email integrations for alerts and exec reports

- Improved automation quality with better AI replies

- Better categorization through semantic topics

- More data sources to give you a full view of customer feedback

- ASO features like custom product pages support

The dream is a big button that just makes everything good. We're working on it.

If there is anything at all you’d like to see in AppFollow, cast your vote here, and we’ll try to bring it to you in 2026.

FAQ

What is AppFollow used for?

AppFollow is a platform for managing app reputation. You can track ratings and reviews across app stores, respond to users, automate replies, and analyze feedback to find product insights. Support teams use it to handle review responses faster. Product teams use it to understand what users are asking for. Marketing teams use the ASO tools to improve visibility.

Does AppFollow support automation for review responses?

Yes. You can set up rules based on rating, language, topic, and other filters. The AI automation learns from your knowledge base and follows your tone guidelines. It can handle straightforward issues automatically while flagging complex ones for human review. Automation is available on all plans now.

What data sources does AppFollow support?

App Store and Google Play have full feature coverage. Secondary stores like Samsung, Huawei, Amazon, and Galaxy Store are supported with growing feature sets. Trustpilot is the first non-app store source with full support. Steam, Discord, and Facebook are in development. There's also an API for uploading reviews from custom sources.

How does AppFollow use AI?

AI powers several features. There's AI Summary for analyzing review trends over time. Semantic tags automatically categorize reviews by topic and sentiment. AI automation generates responses based on your guidelines and knowledge base. The agent performance dashboard uses AI to summarize department efficiency. AppFollow does not train AI models on customer data.